Reality: Quantum computing is not ready for large-scale production. It is ready for serious, structured engagement, and the organizations that start now will be best positioned when the hardware crosses key thresholds.

Current quantum systems deliver real scientific and strategic value through pilot projects and co-design engagements. But they do not yet meet the operational bar that HPC centers apply to production workloads: repeatable results, auditable provenance, multi-tenant scheduling, and cost-predictable throughput. Acknowledging this honestly is the starting point for making good decisions about quantum investment.

At the same time, there is substantial value in working with today's hardware. Teams that gain hands-on experience with quantum problem formulation, algorithm design, and hybrid integration now are building skills with long lead times. Early experimentation positions organizations to capitalize quickly when fault-tolerant systems arrive, rather than scrambling to catch up.

Why This Myth Persists

Oversimplified metrics. Qubit counts and isolated demonstrations are easier to communicate than end-to-end time-to-answer and variance across production-like workloads. A headline about "1,000 qubits" tells you about capacity, not capability.

Category confusion. "Supremacy" and "advantage" experiments are often scientific milestones. They are not proof of broad enterprise readiness, and conflating the two leads to misaligned expectations.

Hype-driven procurement cycles. Budget windows and innovation mandates reward quick narratives. Nuanced readiness assessments are harder to fit into a slide deck, but they protect against expensive missteps.

What "Production-Ready" Actually Means in HPC Terms

If you are evaluating quantum hardware for your center, apply the same operational guardrails you use for any new accelerator:

Reliability. P95/P99 success rates and stability windows across shifts and maintenance cycles.

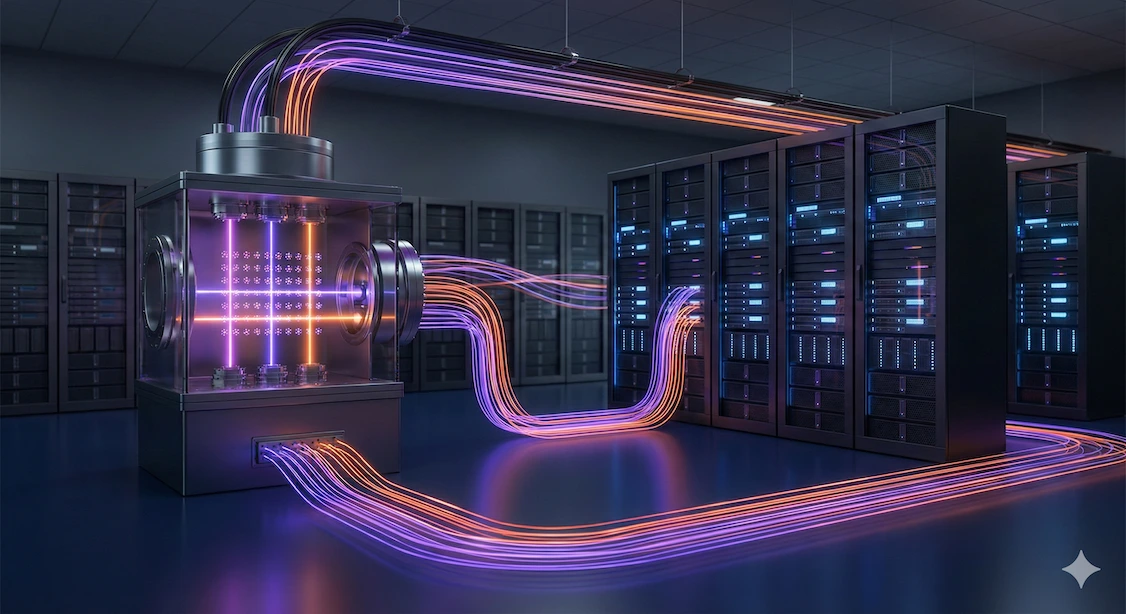

Performance under orchestration. Throughput and queue behavior when scheduled by Slurm or Kubernetes alongside CPUs and GPUs. Quantum must function as an HPC peer, not a standalone exotic system.

Reproducibility and auditability. Run-to-run consistency, provenance logging, and telemetry hooks that meet institutional standards for scientific computing.

Security and governance. Access control, tenancy isolation, data handling, and compliance alignment. For organizations subject to NIST 800-53, FedRAMP, CMMC, or export controls (ITAR/EAR), this is non-negotiable and often drives the decision between cloud and on-premises deployment.

Cost predictability. Cost per successful answer, including compilation, execution, error mitigation, and retries. Budget predictability at monthly and quarterly horizons matters for sustained programs.
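As a rough illustration of "cost per successful answer," the sketch below folds compilation, execution, mitigation overhead, and retries into one number. It assumes that attempts succeed independently with a fixed probability and that each retry pays the full compile-and-run cost. All field names and the example figures are hypothetical and do not reflect any vendor's pricing model.

```python
from dataclasses import dataclass


@dataclass
class RunCosts:
    compile_cost: float       # cost of one compilation (hypothetical units)
    shot_cost: float          # cost of one shot on the hardware
    shots: int                # shots per attempt before mitigation overhead
    mitigation_factor: float  # multiplier on shots from error mitigation (>= 1)
    success_rate: float       # fraction of attempts that yield an acceptable answer


def cost_per_successful_answer(c: RunCosts) -> float:
    """Expected cost until the first acceptable answer.

    Assumes independent attempts, so the expected number of attempts
    is 1 / success_rate, and every attempt is recompiled and rerun.
    """
    if not 0.0 < c.success_rate <= 1.0:
        raise ValueError("success_rate must be in (0, 1]")
    if c.mitigation_factor < 1.0:
        raise ValueError("mitigation_factor must be >= 1")
    cost_per_attempt = c.compile_cost + c.shot_cost * c.shots * c.mitigation_factor
    expected_attempts = 1.0 / c.success_rate
    return cost_per_attempt * expected_attempts


example = RunCosts(compile_cost=2.0, shot_cost=0.01, shots=1000,
                   mitigation_factor=3.0, success_rate=0.8)
print(cost_per_successful_answer(example))
```

Tracking this figure per workload, rather than the list price per shot, is what makes monthly and quarterly budgets comparable across systems.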

Technical Constraints Worth Tracking

These are the factors that determine whether a quantum system can deliver useful results for your specific workloads:

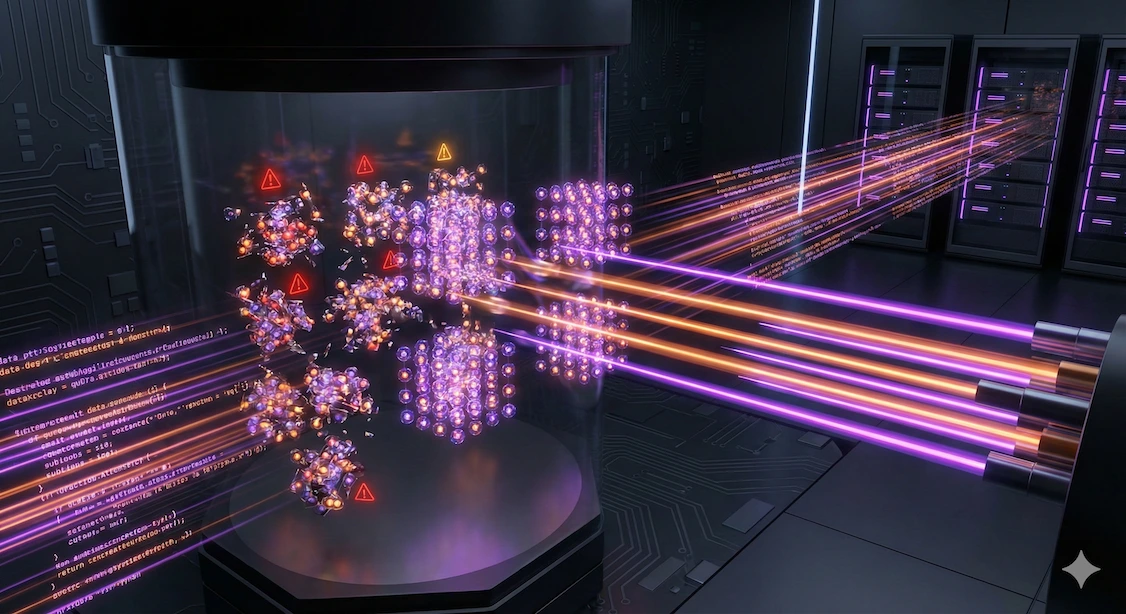

Qubit quality and coherence. These limit circuit depth and the size of problems you can meaningfully address. Raw qubit counts without fidelity context are incomplete at best.
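To see why fidelity context matters more than qubit count, consider a deliberately simplified model in which every gate fails independently with the same probability, so overall circuit fidelity is roughly the per-gate fidelity raised to the number of gates. Real devices have correlated and gate-dependent errors, so treat the sketch below as an order-of-magnitude guide only. The function name and the 0.5 target are assumptions made for illustration.

```python
import math


def max_gates_at_target(gate_fidelity: float, target_fidelity: float) -> int:
    """Largest gate count n such that gate_fidelity**n >= target_fidelity.

    Assumes independent, identical gate errors (a simplification).
    """
    if not (0.0 < gate_fidelity < 1.0 and 0.0 < target_fidelity < 1.0):
        raise ValueError("fidelities must lie strictly between 0 and 1")
    return math.floor(math.log(target_fidelity) / math.log(gate_fidelity))


# Gate budget at a 0.5 overall fidelity target for a few per-gate fidelities.
for f in (0.99, 0.995, 0.999):
    print(f, max_gates_at_target(f, 0.5))
```

Under this model, small improvements in per-gate fidelity translate into large changes in the usable gate budget, which is why "depth at target fidelity" is a more informative question than "how many qubits."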

Error rates and correction overhead. Error mitigation techniques help today but carry runtime and sample-count trade-offs. Full error correction works on a small scale, as recent demonstrations have shown, but remains resource-intensive. Scaling from physical qubits to large numbers of logical qubits is the defining challenge of this era.
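The sample-count trade-off can be made concrete with a toy model. The sketch below assumes the required number of shots grows by a fixed multiplicative factor per gate, the kind of scaling associated with quasi-probability-style mitigation. The per-gate factor `gamma_per_gate` is a hypothetical input, not a measured value, and other mitigation methods scale differently.

```python
def mitigated_shots(base_shots: int, gamma_per_gate: float, n_gates: int) -> float:
    """Shots needed to keep the same statistical error after mitigation.

    Toy model: sampling overhead is gamma_per_gate**(2 * n_gates),
    where gamma_per_gate >= 1 is an assumed per-gate cost factor.
    """
    if gamma_per_gate < 1.0:
        raise ValueError("gamma_per_gate must be >= 1")
    if n_gates < 0:
        raise ValueError("n_gates must be non-negative")
    return base_shots * gamma_per_gate ** (2 * n_gates)


# Overhead grows exponentially with circuit size in this model.
for n in (10, 100, 500):
    print(n, round(mitigated_shots(1000, 1.01, n)))
```

Even a per-gate factor very close to 1 compounds quickly, which is why the cost of mitigation should be quantified at the circuit sizes you actually intend to run.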

Connectivity and routing overhead. Extra operations to connect distant qubits add time and error. Architectures differ significantly here. Field-Programmable Qubit Arrays (FPQAs) and atom shuttling can dynamically reconfigure qubit connectivity to match problem structure, reducing routing penalties that fixed-topology systems must pay.
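A quick way to get a feel for routing penalties is to count how far apart interacting qubits sit on a given coupling graph. The sketch below is a naive estimate, not a real router: it charges `distance - 1` swaps for each two-qubit interaction and ignores the fact that swaps change the layout for later gates. The topologies and the interaction list are made-up examples.

```python
from collections import deque


def distances_from(coupling: dict, src: int) -> dict:
    """Breadth-first search distances from src over the coupling graph."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        u = queue.popleft()
        for v in coupling[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def naive_swap_estimate(coupling: dict, interactions: list) -> int:
    """Sum of (shortest-path distance - 1) over all two-qubit interactions."""
    total = 0
    for a, b in interactions:
        d = distances_from(coupling, a)[b]
        total += max(d - 1, 0)
    return total


n = 5
line = {i: [j for j in (i - 1, i + 1) if 0 <= j < n] for i in range(n)}  # fixed chain
full = {i: [j for j in range(n) if j != i] for i in range(n)}            # all-to-all
interactions = [(0, 4), (1, 3), (0, 1)]  # hypothetical problem graph edges

print("line:", naive_swap_estimate(line, interactions))
print("all-to-all:", naive_swap_estimate(full, interactions))
```

Running the same estimate on your own problem graphs against each candidate topology gives a first-order view of where connectivity will cost you depth and fidelity.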

Calibration drift and uptime. Stability over hours matters for batch workloads. Ask vendors about uptime across an 8-hour window, not just peak performance snapshots. Room-temperature operation simplifies this challenge compared to systems requiring millikelvin cryogenic environments.

Compiler and control stack maturity. Placement, scheduling, and gate-level optimizations determine usable performance as much as the underlying hardware. A capable compiler can close the gap between raw hardware specs and delivered results.

What to Measure Instead of "Ready or Not Ready"

Binary assessments are not useful. Track these metrics against your specific problem instances:

Time-to-answer for your workloads, from queue to compile to execute to post-process.

Solution quality versus classical baselines, including variance and confidence intervals, not just best-case snapshots.

P95/P99 success rate and calibration-induced downtime over realistic operational windows.

Routing overhead: swap counts and added depth for your problem graphs. This is where architecture choices have outsized impact.

Hybrid efficiency: data movement latency, kernel granularity, and orchestration overhead between quantum and classical resources.

Cost per successful run and budget predictability at program-relevant horizons.
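Several of the metrics above can be computed from ordinary job logs. The sketch below assumes each run is recorded as a dictionary with per-stage timings in seconds and a success flag. The field names are hypothetical and would need to match whatever your scheduler and telemetry actually emit. Percentiles use the nearest-rank method with integer arithmetic to avoid floating-point surprises.

```python
def percentile(values: list, p: int):
    """Nearest-rank percentile for integer p in 1..100."""
    if not values or not 1 <= p <= 100:
        raise ValueError("need non-empty values and 1 <= p <= 100")
    ordered = sorted(values)
    rank = (p * len(ordered) + 99) // 100  # ceil(p * n / 100) in integer math
    return ordered[rank - 1]


def summarize(runs: list) -> dict:
    """End-to-end time-to-answer percentiles and success rate."""
    totals = [r["queue"] + r["compile"] + r["execute"] + r["post"] for r in runs]
    successes = sum(1 for r in runs if r["success"])
    return {
        "success_rate": successes / len(runs),
        "p50_time": percentile(totals, 50),
        "p95_time": percentile(totals, 95),
        "p99_time": percentile(totals, 99),
    }


# Synthetic example: 20 runs whose queue time dominates; every fifth run fails.
runs = [
    {"queue": i, "compile": 0, "execute": 0, "post": 0, "success": i % 5 != 0}
    for i in range(1, 21)
]
print(summarize(runs))
```

Reporting tail percentiles alongside the median keeps a few fast, clean runs from masking queue delays or calibration-induced slowdowns.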

How to Read Common Claims

"Thousands of qubits." Capacity, not capability. Ask for depth at target fidelity, routing overhead for representative circuits, and end-to-end metrics on problems that matter to you.

"High fidelity." Request variance and stability over an 8-hour window, not a single cherry-picked number. Fidelity that degrades under sustained workloads is not production fidelity.

"Utility/advantage demonstration." Clarify task relevance to your domain, the classical baseline used, sample complexity, and net time-to-answer including all overhead.

"Error mitigation replaces error correction." Mitigation is useful today, but carries runtime and sample-count trade-offs that compound with circuit size. Quantify total cost and accuracy before relying on mitigation as a long-term strategy. The industry's trajectory is toward fault-tolerant error correction, and your roadmap should account for that transition.

What Is Genuinely Useful Today

Quantum computing is not ready for production, but it is ready for structured engagement that builds real organizational capability.

Algorithm co-design. Identify which problem encodings and heuristics survive current noise and topology constraints. This informs future model choices and ensures your organization is not starting from scratch when fault-tolerant hardware arrives. Structured co-design engagements, where domain scientists partner with quantum specialists, consistently produce more actionable results than unsupported hardware access alone.

Workforce development and quantum literacy. Train teams on quantum problem formulation, kernel design, and hybrid integration. These skills have long lead times, and starting now provides a durable competitive advantage. Open-source tools like QuEra’s Bloqade let teams experiment with emulators before committing to hardware.

Hybrid workflow patterns. Establish scheduling, telemetry, and data governance patterns so quantum becomes a manageable resource class within your existing HPC infrastructure. The integration work you do today, connecting quantum processing units to your CPU/GPU pipelines through standard job schedulers, transfers directly to next-generation systems.

Targeted domain applications. Physics-inspired simulations, certain optimization heuristics, and sampler/estimator subroutines where the hardware's native strengths align with the workload. Focus on problems where quantum's structure matches your problem's structure, rather than forcing arbitrary workloads onto quantum hardware.

Bottom Line

Quantum computing is not ready for immediate large-scale production. We are direct about that because credibility matters more than hype.

What quantum computing is ready for: serious, structured engagement that builds the skills, workflows, and organizational readiness your team will need when fault-tolerant systems arrive. Measure with production-level KPIs. Integrate thoughtfully with your HPC stack. Invest in people and processes now.

The organizations that treat quantum as a strategic capability under active development, rather than waiting for a binary "ready" signal, will be the ones positioned to move fastest when the hardware crosses key thresholds. That moment is closer than many realize, and preparation time is finite.
