Why This Matters To HPC Centers

HPC centers live and die by reliability. Classical supercomputers deliver reproducible, verifiable results. Quantum error correction (QEC) is what bridges the gap between noisy quantum experiments and the kind of dependable computation HPC users demand. The recent burst of QEC breakthroughs represents a shift from proof-of-concept demonstrations to scalable engineering approaches that directly affect timelines for when quantum resources become genuinely useful in production HPC environments.

The Evidence Base

▸ "Quantum computers will finally be useful: what's behind the revolution" (Nature)

▸ "Quantum Simulations Become 100x More Efficient With New Error Bound" (Quantum Zeitgeist)

▸ "Understanding Fault-tolerant Quantum Computing" (QuEra)

▸ "Researchers Demonstrate Fault-Tolerant Quantum Operations Using Lattice Surgery" (The Quantum Insider)

▸ "QuEra Unveils Breakthrough in Algorithmic Fault Tolerance" (QuEra)

▸ "Paul Scherrer Institute and ETH Zurich Demonstrate Fault-Tolerant Lattice Surgery" (QCR)

Details

QEC has long been the field's most critical bottleneck. Multiple independent research groups have now demonstrated fault-tolerant operations, new QEC code designs, and efficiency breakthroughs that cut the resource overhead required for reliable quantum computation. For HPC centers, these advances directly affect planning timelines: fault-tolerant quantum computing is becoming an engineering problem with measurable, accelerating progress.

The lattice surgery demonstrations from PSI/ETH Zurich and from superconducting qubit teams represent important milestones. Lattice surgery is a candidate technique for performing logical operations between error-corrected qubits at scale, and successful demonstrations on real hardware validate the theoretical frameworks that quantum computing roadmaps depend on. Meanwhile, a new error bound has been reported to make quantum simulations 100x more efficient. That is the kind of step-change that reshapes what is computationally feasible.
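To make "logical operations between error-corrected qubits" concrete: at the logical level, merging two surface-code patches with lattice surgery is commonly described as a joint parity measurement such as Z⊗Z on the two logical qubits. The toy sketch below assumes each logical qubit is a single ideal qubit and ignores the physical patches, stabilizer rounds, and decoding entirely; the function name `measure_zz` is hypothetical. It shows that measuring Z⊗Z on |+>|+> and obtaining the even-parity outcome leaves the two logical qubits in a Bell state, the kind of entangling step that measurement-based logical gates are built from.

```python
import math

# Toy logical-level model (assumption): each logical qubit is one ideal qubit.
# Basis order: |00>, |01>, |10>, |11>, first bit = logical qubit A.
def measure_zz(state, outcome):
    """Project a two-qubit state onto Z_A Z_B = outcome (+1 even parity, -1 odd parity).

    Returns (probability of that outcome, normalized post-measurement state).
    """
    keep = (0, 3) if outcome == +1 else (1, 2)
    projected = [amp if i in keep else 0j for i, amp in enumerate(state)]
    prob = sum(abs(a) ** 2 for a in projected)
    if prob == 0:
        raise ValueError("requested outcome has zero probability")
    norm = math.sqrt(prob)
    return prob, [a / norm for a in projected]


# Both logical qubits prepared in |+>, i.e. equal amplitudes on all four basis states.
plus_plus = [0.5 + 0j] * 4
prob, post = measure_zz(plus_plus, +1)
print(prob)  # 0.5: each parity outcome is equally likely
print(post)  # amplitudes only on |00> and |11>: the Bell state (|00> + |11>)/sqrt(2)
```

In real hardware the parity is inferred from many rounds of noisy stabilizer measurements across the merged patch, which is exactly what the lattice surgery experiments have to get right.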

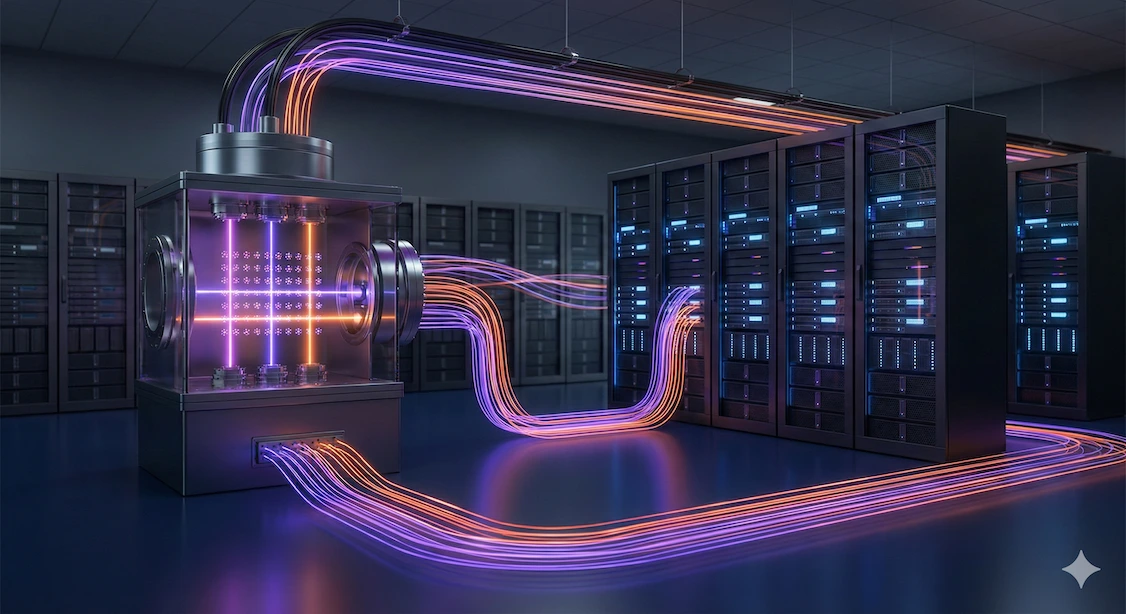

For HPC centers, the practical takeaway is that the path to useful quantum computation runs through error correction, and that path is being paved faster than many expected. Advances in qLDPC codes, bosonic codes, phantom codes, and improved decoders all contribute to reducing the physical-to-logical qubit overhead. This, in turn, reduces the scale of quantum hardware needed to tackle real scientific problems. Centers should be tracking these developments closely because they directly determine when quantum will cross the threshold from "interesting experiment" to "production tool."
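To see why physical-to-logical overhead drives hardware scale, here is a back-of-the-envelope sketch for the surface code only (not the qLDPC, bosonic, or other codes named above). It assumes the widely used heuristic p_L ≈ A·(p/p_th)^((d+1)/2) for a distance-d code, with illustrative constants A = 0.1 and threshold p_th = 1%, and counts 2d² − 1 physical qubits per logical qubit (rotated surface code: d² data plus d² − 1 measurement qubits). The function names and constants are assumptions for illustration, not vendor figures.

```python
def logical_error_rate(p, d, p_th=1e-2, prefactor=0.1):
    """Heuristic logical error rate per round for a distance-d surface code (assumed model)."""
    return prefactor * (p / p_th) ** ((d + 1) / 2)


def qubits_per_logical(d):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def min_distance(p, target, p_th=1e-2, prefactor=0.1, d_max=101):
    """Smallest odd code distance whose heuristic logical error rate meets the target."""
    if p >= p_th:
        raise ValueError("physical error rate must be below threshold")
    for d in range(3, d_max + 1, 2):
        if logical_error_rate(p, d, p_th, prefactor) <= target:
            return d
    raise ValueError("target not reachable within d_max")


# Compare two physical error rates against the same logical target.
for p in (1e-3, 5e-4):
    d = min_distance(p, target=5e-13)
    print(f"p={p:g}: d={d}, ~{qubits_per_logical(d)} physical qubits per logical qubit")
```

Under these assumptions, halving the physical error rate from 0.1% to 0.05% lowers the required distance from 23 to 17 and cuts the cost from about 1,057 to about 577 physical qubits per logical qubit. Better codes and decoders attack the same overhead from the other direction.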

The diversity of QEC approaches also matters strategically. HPC centers do not need to bet on a single error correction paradigm. But they do need staff who understand the landscape well enough to evaluate vendor claims and assess when quantum resources will be reliable enough to integrate into mission-critical workflows.

Learn more about QuEra’s Quantum Error Correction approach here.
