The integration of quantum computers into High-Performance Computing (HPC) environments is not a single event; it is an evolution. As we move from experimental racks to enterprise-scale solutions, the conversation has shifted from whether quantum fits into the data center to how it integrates with existing orchestration: from "One Day" to "Day One".

Recent discussions with industry leaders and infrastructure partners have highlighted a critical reality: we cannot treat quantum resources as immediately interchangeable with typical HPC nodes. Instead, we must adopt a staged "Horizon Planning" strategy to bridge the gap between current infrastructure and the fault-tolerant future.

Here are the three distinct horizons of Quantum/HPC integration.

Horizon 1: The Software Handshake

Focus: API Stability and Job Scheduling

The first phase is not about deep hardware entanglement but about establishing the lines of communication. Most research centers and national labs are not yet ready to treat quantum resources as interchangeable commodity hardware.

In this "Early Access" horizon, the priority is software integration.

- The Goal: Develop stable APIs and plugins (for example, for Slurm or PBS Pro) that allow classical systems to "see" the quantum resource.

- The Reality: The quantum computer operates largely as a distinct entity. The HPC workload manager receives availability schedules from the quantum system for job scheduling and execution tracking, but the workflows remain loosely coupled.

- Key Challenge: Defining the "who" (the customer) and the "how" (orchestration). This phase is about learning to walk before we run, ensuring that job submission protocols are robust before attempting complex hybrid workflows.
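To make the "availability schedule" idea concrete, here is a minimal sketch of the check a scheduler plugin might perform before placing a quantum job. The `Window` type, the use of minutes as the time unit, and the `earliest_start` helper are all hypothetical illustrations, not part of the Slurm or PBS Pro APIs; a real plugin would translate the result into the scheduler's own reservation mechanism.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Window:
    """Hypothetical availability window published by the quantum system.

    Times are in minutes from an arbitrary epoch; `end` is exclusive.
    """
    start: int
    end: int


def earliest_start(windows: List[Window], duration: int, not_before: int = 0) -> Optional[int]:
    """Return the earliest time a job of `duration` minutes fits entirely
    inside a single availability window, or None if no window is long enough."""
    for w in sorted(windows, key=lambda w: w.start):
        start = max(w.start, not_before)  # cannot start before the job is ready
        if start + duration <= w.end:
            return start
    return None
```

With windows `[Window(0, 30), Window(60, 180)]`, a 45-minute job cannot fit in the first window and is placed at minute 60. Keeping the quantum system as a loosely coupled calendar like this matches the Horizon 1 posture: the scheduler only needs to know when the device is usable, not how it works.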

During this phase, HPC teams validate how the quantum system exposes resources—queues, job states, error codes, and utilization metrics—through familiar scheduler interfaces. The emphasis is on predictable behavior: making sure a quantum job can be submitted, tracked, and recovered with the same reliability expectations as any specialized accelerator.
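One way to get that predictable behavior is to normalize vendor-specific job states onto the handful of states HPC operators already know, and to resubmit only on errors known to be transient. The sketch below assumes hypothetical vendor state names (`queued`, `calibrating`, and so on) and hypothetical error codes; it also assumes the `submit` callable blocks until the job reaches a terminal state.

```python
from enum import Enum


class SchedulerState(Enum):
    PENDING = "PENDING"
    RUNNING = "RUNNING"
    COMPLETED = "COMPLETED"
    FAILED = "FAILED"


# Hypothetical vendor-side job states mapped onto familiar scheduler states.
VENDOR_TO_SCHEDULER = {
    "queued": SchedulerState.PENDING,
    "calibrating": SchedulerState.PENDING,
    "executing": SchedulerState.RUNNING,
    "done": SchedulerState.COMPLETED,
    "error": SchedulerState.FAILED,
}

# Hypothetical error codes treated as transient, so resubmission is safe.
RETRYABLE_ERRORS = {"CALIBRATION_DRIFT", "DEVICE_OFFLINE"}


def map_state(vendor_state: str) -> SchedulerState:
    # Unknown states surface as FAILED instead of being silently ignored.
    return VENDOR_TO_SCHEDULER.get(vendor_state, SchedulerState.FAILED)


def run_with_recovery(submit, max_retries: int = 2):
    """Call `submit()` (returns (vendor_state, error_code)) until it succeeds,
    hits a non-retryable error, or exhausts `max_retries` resubmissions.

    Returns (final_state, attempts).
    """
    attempts = 0
    while True:
        attempts += 1
        vendor_state, error_code = submit()
        state = map_state(vendor_state)
        if state is SchedulerState.COMPLETED:
            return state, attempts
        if error_code not in RETRYABLE_ERRORS or attempts > max_retries:
            return state, attempts
```

A job that first fails with `CALIBRATION_DRIFT` and then completes is reported as `COMPLETED` after two attempts, while a fatal error such as an invalid circuit fails immediately. This mirrors how operators already treat other specialized accelerators.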

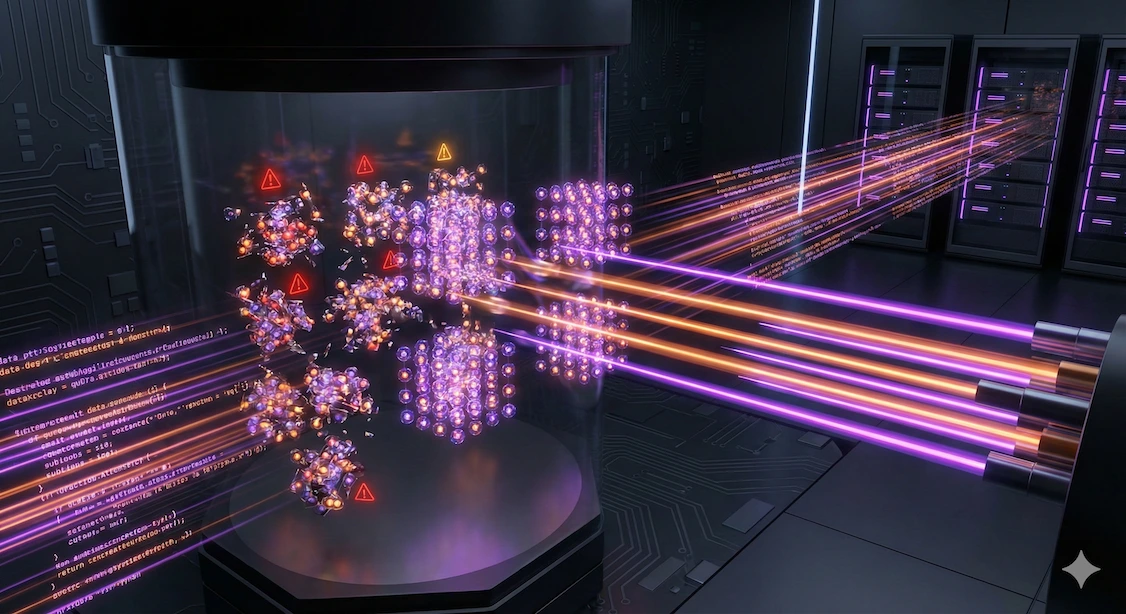

Horizon 2: The Hybrid Loop

Focus: Variational Algorithms

As the orchestration layer stabilizes, we move into the era of true hybrid utility. Horizon 2 is defined by the execution of hybrid quantum-classical algorithms, most notably the Variational Quantum Eigensolver (VQE) and the Quantum Approximate Optimization Algorithm (QAOA).

- The Shift: The quantum computer moves from a scheduled oddity to a co-processor.

- The Workflow: This phase introduces iterative loops in which classical processing alternates with quantum operations. Because every iteration pays the round-trip cost, the latency between the two systems becomes an important metric.

- The Strategy: HPC centers begin to test the limits of "reserved hours" vs. "shared resource" policies, learning how to manage workflows when a single job—like a chemical simulation or optimization problem—requires rapid back-and-forth communication between classical and quantum processors.
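The shape of such a loop can be sketched with a toy single-qubit problem standing in for a real VQE: minimize ⟨Z⟩ after an RY(θ) rotation, which equals cos θ, using gradients from the parameter-shift rule. `ToyQPU` and its `latency_s` value are hypothetical; the per-call latency is tallied rather than slept so the arithmetic stays visible.

```python
import math
from dataclasses import dataclass


@dataclass
class ToyQPU:
    """Stand-in for a remote quantum device.

    Each `expectation` call models one classical-quantum round trip;
    `latency_s` is a hypothetical per-call overhead that is counted, not slept.
    """
    latency_s: float = 0.005
    calls: int = 0

    def expectation(self, theta: float) -> float:
        # <Z> after applying RY(theta) to |0> equals cos(theta).
        self.calls += 1
        return math.cos(theta)


def variational_loop(qpu: ToyQPU, theta: float = 0.1, lr: float = 0.4, iters: int = 100):
    """Plain gradient descent on theta; returns (theta, energy, modeled latency)."""
    for _ in range(iters):
        # Parameter-shift rule: d<Z>/dtheta = [f(theta + pi/2) - f(theta - pi/2)] / 2
        grad = (qpu.expectation(theta + math.pi / 2)
                - qpu.expectation(theta - math.pi / 2)) / 2
        theta -= lr * grad  # classical optimizer step
    energy = qpu.expectation(theta)  # final evaluation at the optimized parameter
    return theta, energy, qpu.calls * qpu.latency_s
```

The loop converges to θ ≈ π with energy ≈ -1, but it needs 2 × 100 + 1 = 201 device calls. At the assumed 5 ms per call, that is about one second of pure communication overhead before any queueing, which is why latency becomes a first-class metric once the quantum computer acts as a co-processor.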

In this phase, HPC teams begin characterizing how quantum-classical latency impacts real workflows—mapping where batching, circuit compilation caching, and parameter updates must occur to maintain throughput. The priority is establishing repeatable hybrid loops that behave predictably under load, allowing HPC schedulers to treat quantum calls as dependable subroutines rather than unpredictable external events.
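Two of those levers, compilation caching and batching, can be sketched together. The compile step depends only on circuit structure, so it can be memoized with `functools.lru_cache`, while both parameter-shift evaluations are submitted in one call. `compile_circuit` and `BatchingBackend` are hypothetical placeholders for a real toolchain and device interface.

```python
import math
from functools import lru_cache
from typing import List, Sequence, Tuple


@lru_cache(maxsize=None)
def compile_circuit(structure: Tuple[str, ...]) -> Tuple[str, ...]:
    """Hypothetical compiler. The expensive step depends only on the gate
    sequence, not on parameter values, so results can be cached."""
    return tuple(g.upper() for g in structure)  # placeholder "native" gates


class BatchingBackend:
    """Hypothetical backend that accepts several parameter bindings for one
    compiled circuit in a single submission (one round trip)."""

    def __init__(self) -> None:
        self.submissions = 0

    def run_batch(self, compiled: Tuple[str, ...], thetas: Sequence[float]) -> List[float]:
        self.submissions += 1
        return [math.cos(t) for t in thetas]  # toy <Z> after RY(theta)


def batched_gradient(backend: BatchingBackend, theta: float) -> float:
    compiled = compile_circuit(("ry", "measure_z"))  # cache hit after the first call
    # Both parameter-shift evaluations travel in one submission.
    plus, minus = backend.run_batch(compiled, [theta + math.pi / 2, theta - math.pi / 2])
    return (plus - minus) / 2
```

Over 50 gradient evaluations, this compiles once and makes 50 submissions instead of 100. The design choice is to keep parameter binding on the fast path and push structural work to a one-time cost, so each hybrid iteration costs a single round trip.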

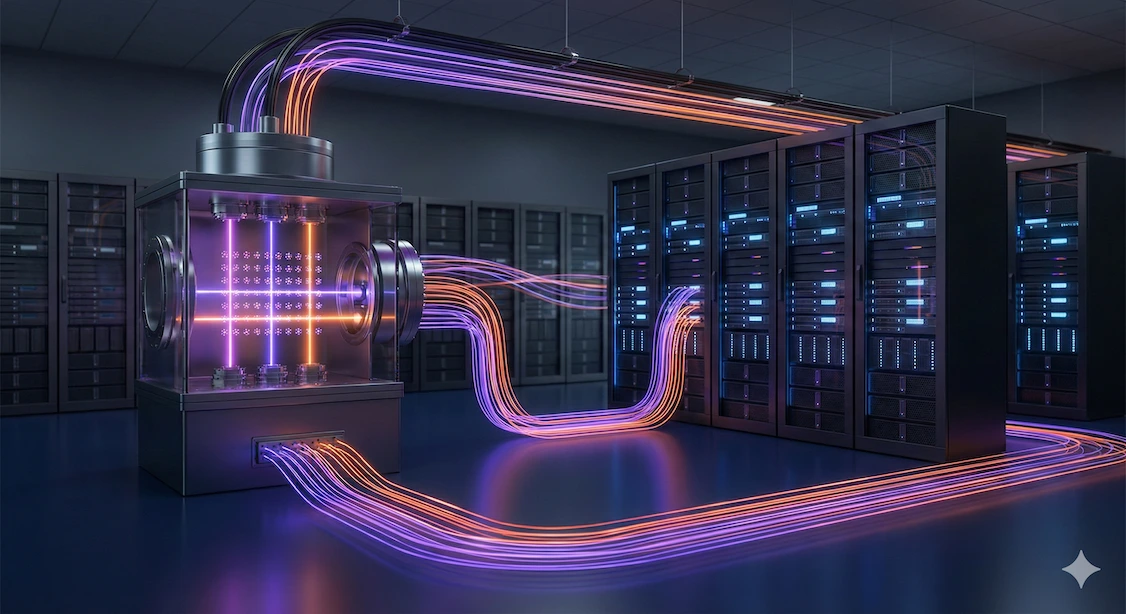

Horizon 3: The Fault-Tolerant Symbiosis

Focus: FTQC and Deep Data Integration

The final horizon represents the holy grail of integration: Fault-Tolerant Quantum Computing (FTQC). In this stage, the relationship between the supercomputer and the quantum computer inverts and intensifies.

- The New Dynamic: The quantum computer is no longer just a job recipient; it also becomes a massive consumer of HPC resources.

- Coherent Data Integration: A key requirement in this phase is the integration of large-scale classical data directly into the quantum computer's coherent computation. The system must support long-duration coherent computation in which classical data inputs interact dynamically with quantum states.

- Real-Time Support: Additionally, classical HPC resources must perform real-time calculations to handle Quantum Error Correction (QEC) and calibration to keep the quantum system stable during the computation. This requires a seamless, ultra-low-latency integration where neither machine can function effectively without the other.
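The real-time requirement can be illustrated with a deliberately small example: a bit-flip repetition code decoded by minimum weight, plus a simple throughput model of the decoder keeping pace with the QEC cycle. This is a toy stand-in. Production systems use codes such as the surface code with decoders like minimum-weight perfect matching or union-find, and the 1 µs cycle time below is an illustrative assumption, not a vendor figure.

```python
from typing import List


def measure_syndrome(bits: List[int]) -> List[int]:
    # Parity checks between neighbouring data qubits of a bit-flip repetition code.
    return [bits[i] ^ bits[i + 1] for i in range(len(bits) - 1)]


def decode(syndrome: List[int]) -> List[int]:
    """Minimum-weight decoding: the syndrome fixes the error pattern up to a
    global flip, so build one candidate and keep the lighter of it and its
    complement."""
    candidate = [0]
    for s in syndrome:
        candidate.append(candidate[-1] ^ s)
    complement = [1 - e for e in candidate]
    return candidate if sum(candidate) <= sum(complement) else complement


def correct(bits: List[int]) -> List[int]:
    error = decode(measure_syndrome(bits))
    return [b ^ e for b, e in zip(bits, error)]


def backlog(rounds: int, cycle_ns: int, decode_ns: int) -> int:
    """Rounds still undecoded after `rounds` QEC cycles, assuming the decoder
    processes one round at a time. Integer nanoseconds keep the model exact."""
    decoded = min(rounds, (rounds * cycle_ns) // decode_ns)
    return rounds - decoded
```

`correct([0, 1, 0, 0, 0])` removes the single flip, and `correct([0, 0, 1, 1, 1])` restores a logical 1 despite two flips. The backlog model shows why latency is non-negotiable: with a 1000 ns cycle, an 800 ns decoder keeps up, but a 1250 ns decoder leaves 200 of 1000 rounds waiting, and that gap keeps growing for as long as the computation runs.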

At this stage, the classical environment becomes inseparable from quantum execution, with HPC clusters supplying continuous streams of decoding, routing, and calibration computations required for large-scale error correction. The focus shifts toward ensuring sustained, ultra-low-latency data exchange and guaranteeing that both systems can co-schedule long-running, interdependent workloads without violating coherence or throughput requirements.
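Co-scheduling at this stage means finding time when both machines are free simultaneously, not merely when each is free on its own. A minimal sketch of that co-allocation step intersects two free-time calendars and picks the first shared window long enough for the job. The calendars, minute units, and helper names are hypothetical; production schedulers express the same idea through their own advance-reservation features.

```python
from typing import List, Optional, Tuple

Interval = Tuple[int, int]  # (start, end) in minutes, end exclusive


def intersect(a: List[Interval], b: List[Interval]) -> List[Interval]:
    """Intersect two sorted, non-overlapping lists of free intervals."""
    i = j = 0
    out: List[Interval] = []
    while i < len(a) and j < len(b):
        start = max(a[i][0], b[j][0])
        end = min(a[i][1], b[j][1])
        if start < end:
            out.append((start, end))
        # Advance whichever interval finishes first.
        if a[i][1] < b[j][1]:
            i += 1
        else:
            j += 1
    return out


def co_allocate(qpu_free: List[Interval], hpc_free: List[Interval], duration: int) -> Optional[int]:
    """Earliest start at which both resources are free for `duration` minutes."""
    for start, end in intersect(qpu_free, hpc_free):
        if end - start >= duration:
            return start
    return None
```

With the quantum system free during `[(0, 120), (200, 500)]` and the decoding partition free during `[(60, 300), (400, 600)]`, a 90-minute workload lands at minute 200, while a 150-minute workload cannot be co-allocated at all, even though each resource individually has windows that long.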

The Path Forward

Integrating quantum into the data center is a journey of translation. It requires translating the needs of HPC managers—who care about utilization, queues, and uptime—into the language of quantum physics.

By viewing this evolution through these three horizons, infrastructure providers can move beyond the hype and start building the specific plugins, APIs, and scheduling policies required for today, while architecting the deep integrations needed for the fault-tolerant systems of the future.
