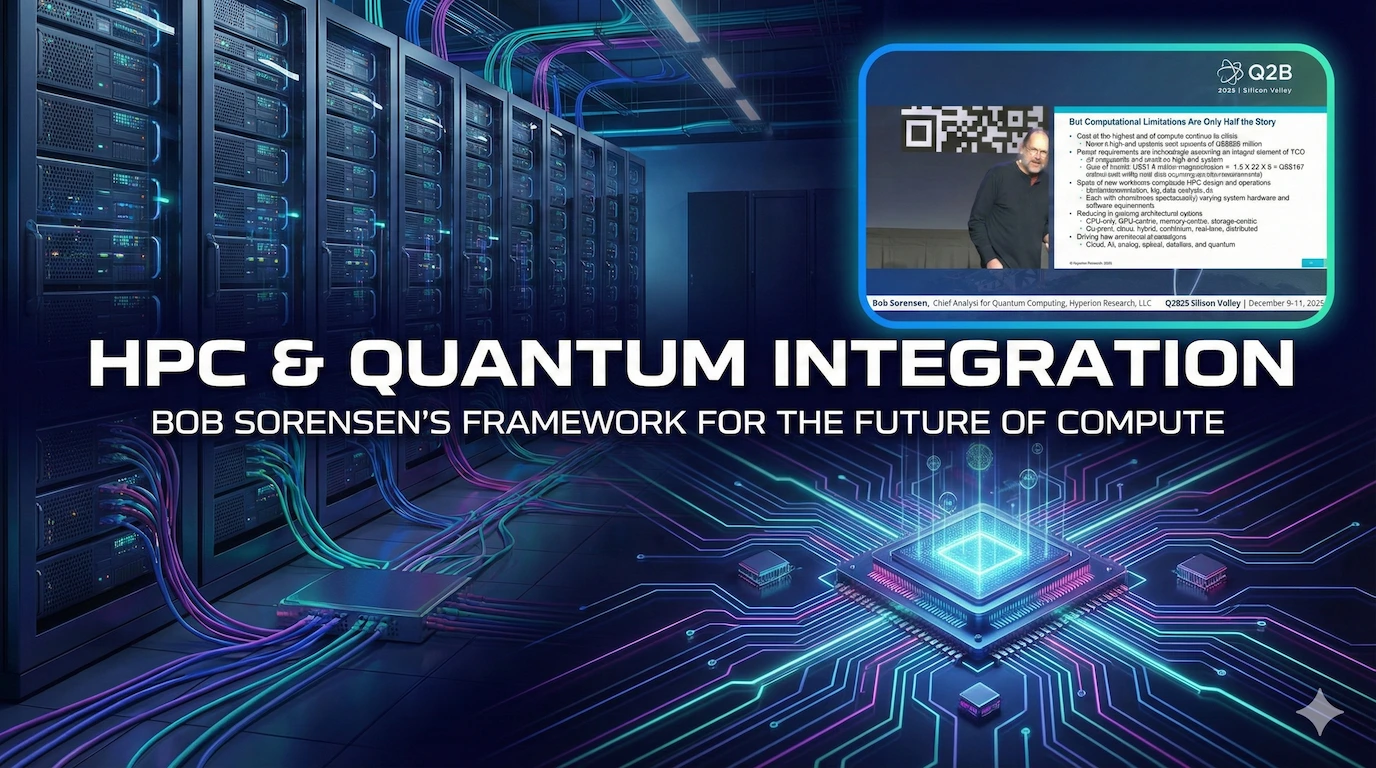

Opinion from Tommaso Macri following the 2025 PEARC conference:

For two decades, GPUs transformed high-performance computing by slipping into existing workflows as specialized accelerators. They didn’t replace CPUs; they augmented them. Quantum computing should follow the same path, integrating as an accelerator inside familiar HPC pipelines, but this time on a compressed timeline. These recommendations draw on discussions at the PEARC’25 2nd Workshop on Broadly Accessible Quantum Computing, held in Columbus, OH, on July 21, 2025.

Start with integration, not isolation.

Quantum processing units (QPUs) should be scheduled, monitored, and billed like any other accelerator. That means explicit support in job schedulers and resource managers, plus clear patterns for hybrid jobs that pass state between classical and quantum stages. Treat quantum as a first-class resource type within HPC centers and cloud gateways.

Standardize the software path early.

The GPU era only hit its stride once programming models stabilized and portable abstractions emerged. Quantum needs an open, modular stack now: common intermediate representations, device-agnostic APIs, and containerized toolchains that build once and run across hardware modalities. Just as important are dev tools HPC teams actually use: debuggers, profilers, reproducible environments. If scientists can’t reproduce or debug a quantum-accelerated run, they won’t use it.

Fund the “boring” parts of the stack.

Breakthrough qubits make headlines; schedulers, simulators, and visualization tools make adoption possible. Public programs should prioritize these connective layers: queueing policies that account for shot budgets and latency; simulators that let teams develop locally before consuming scarce QPU time; visualization that exposes noise, fidelity, and cost to users. The return is multiplicative: every app team benefits, regardless of hardware choice.

Co-design with real applications.

GPUs won when kernels, libraries, and compilers co-evolved with hardware. Quantum needs the same: domain libraries for optimization, chemistry, and materials; compilers that handle layout, noise, and calibration; and benchmarks tied to end-to-end outcomes, not just qubit counts. The metric that matters is speedup or capability gain in a production-relevant workflow.

Build the workforce that bridges worlds.

HPC centers became GPU powerhouses because systems engineers, research software engineers, and domain scientists learned to collaborate. For quantum, invest in technician training, control-systems talent, and hybrid algorithm developers. Short, credentialed pathways can upskill existing HPC staff—people who already understand schedulers, containers, and performance engineering.

Accelerate procurement and policy.

Align funding and procurement with the accelerator model. Support on-prem testbeds attached to supercomputers and cloud-accessible devices behind common interfaces. Require portability and transparent benchmarking in solicitations. Encourage multi-vendor experimentation to avoid lock-in and spur toolchain maturity.

By embedding quantum inside the HPC fabric where data already lives and workflows already run, we turn prototypes into platforms. The GPU playbook shows the way: make integration easy, performance transparent, and software boring (in the best sense), and empower the people who keep systems running. Do that now, and quantum becomes an indispensable accelerator that quietly changes what’s possible.