QuEra's path from logical-qubit demonstrations to fault-tolerant quantum computing

Two years ago at Q2B, 48 logical qubits made headlines. The quantum computing community celebrated what seemed like a major milestone—and it was. But milestones are waypoints, not destinations.

At this year's Q2B Silicon Valley, the message from QuEra was different: demonstrations are behind us. The fundamental building blocks for large-scale fault-tolerant quantum computing have been proven. Now the work shifts from "can we?" to "let's go."

Nature Doesn't Have Manufacturing Defects

The case for neutral atoms starts with physics. Take a billion rubidium atoms—a billion potential qubits—and they'll be perfectly identical. No manufacturing defects. No factory strikes. No calibration drift between units. Nature solved the quality control problem billions of years ago.

These atoms are grabbed using optical tweezers and exhibit what the field calls all-to-all connectivity: any qubit can travel and interact with any other qubit. Laser light can illuminate pairs of qubits—or multiple pairs simultaneously—delivering efficiency gains that compound as algorithms grow longer.

But the scaling story is what catches the attention of HPC center operators.

Unlike architectures where control signals, complexity, and cost scale linearly with qubit count, neutral atoms scale logarithmically. Much larger systems require only incrementally more control infrastructure. And cryogenics? Not required.
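
To get a feel for what the difference between linear and logarithmic scaling means in practice, here is a small illustrative sketch. The cost functions are hypothetical stand-ins (one unit of control infrastructure per qubit versus a base-2 logarithm of the qubit count); the actual constants and units for any real system are not given here and are not modeled.

```python
import math

def growth_factor(cost, small_n, large_n):
    """How many times larger cost(large_n) is than cost(small_n)."""
    return cost(large_n) / cost(small_n)

def linear_cost(n):
    # Hypothetical: one unit of control infrastructure per qubit.
    return float(n)

def logarithmic_cost(n):
    # Hypothetical: control infrastructure grows with log2 of the qubit count.
    return math.log2(n)

small, large = 1_000, 1_000_000  # a 1,000-fold increase in qubit count
print(f"linear:      x{growth_factor(linear_cost, small, large):.0f}")
print(f"logarithmic: x{growth_factor(logarithmic_cost, small, large):.1f}")
```

Under these assumptions, growing the qubit count a thousandfold multiplies the linear cost by a thousand, while the logarithmic cost roughly doubles.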

When QuEra visits supercomputing centers and mentions that current systems draw about 10 kilowatts—with next-generation systems perhaps reaching 20—the reaction is consistent: "Kilowatts? What's a kilowatt? I know what a megawatt is."

No cryogenics. Low power. Compact footprint. These aren't just technical advantages; they're deployment realities. QuEra isn't building national monuments. They're building systems that can be installed in any reasonable data center around the world.

Quantum Put to Work

The technology has been running on Amazon Braket for three years now—130 hours per week of availability. That operational maturity creates space for real applications.

Deloitte has used the systems for defect classification. Moody's explored weather forecasting. Amgen and Merck investigated drug discovery prediction.

The pharmaceutical work illustrates an emerging use case. Clinical trial data is sometimes abundant—think COVID vaccines—but often scarce when diseases are rare or treatments expensive. Working with Amgen, Merck, and Deloitte, the team demonstrated that quantum machine learning can extract insights from smaller datasets faster than classical approaches.

Intesa Sanpaolo in Italy has explored credit card default risk modeling. And DARPA's selection of QuEra for Stage B of the Quantum Benchmarking Initiative provides external validation that neutral atoms merit serious attention.

But none of this matters without quantum error correction.

The Error Correction Imperative

Without error correction, quantum computing remains an academic exercise. Today's best single-qubit operations have error rates around one in a thousand. That sounds precise—until you consider algorithms with a million steps. At those scales, you're essentially guaranteed garbage output.
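
The arithmetic behind that claim can be sketched in a few lines. This is a simplified model, not a description of any real machine: it assumes every step fails independently with the same probability and that a single failure ruins the result. The function names are illustrative.

```python
import math

def success_probability(error_rate, steps):
    """Chance that every one of `steps` operations succeeds,
    assuming independent errors at a fixed per-step rate."""
    # log1p keeps precision when error_rate is very small.
    return math.exp(steps * math.log1p(-error_rate))

def max_error_rate(steps, target_success):
    """Per-step error rate that would still give `target_success`
    overall after `steps` operations (same independence assumption)."""
    return -math.expm1(math.log(target_success) / steps)

error_rate = 1e-3  # "one in a thousand"
for steps in (100, 1_000, 1_000_000):
    p = success_probability(error_rate, steps)
    print(f"{steps:>9} steps: success probability = {p:.3g}")

needed = max_error_rate(1_000_000, 0.5)
print(f"error rate for a 50% chance over a million steps: {needed:.2e}")
```

In this model, a thousand steps already succeed only about 37% of the time, and a million steps essentially never do. Reaching even a coin-flip chance over a million steps would need a per-step error rate of roughly seven in ten million, far below one in a thousand.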

Error correction changes the equation entirely. With it, calculations can run longer. And longer is exactly what's required to deliver genuine value.

QuEra, Harvard, and MIT have been systematically proving and improving quantum error correction on neutral atoms. The progress over the past two years has been methodical and increasingly consequential.

A foundational paper two years ago demonstrated that logical qubits and error correction actually work on neutral-atom machines. Follow-up work proved universality by creating high-quality magic states (T states) on logical qubits—a requirement for universal quantum computation.

Then came the scaling analysis. A recent paper showed that methods unique to neutral-atom systems could shorten algorithmic execution time by roughly 50x. For one algorithm, this meant going from about a year to approximately five days.

Additional work has optimized the space and time requirements for error correction itself: how many rounds are needed, which codes perform best, and where efficiencies can be found. Progress continues.

The Zoned Architecture

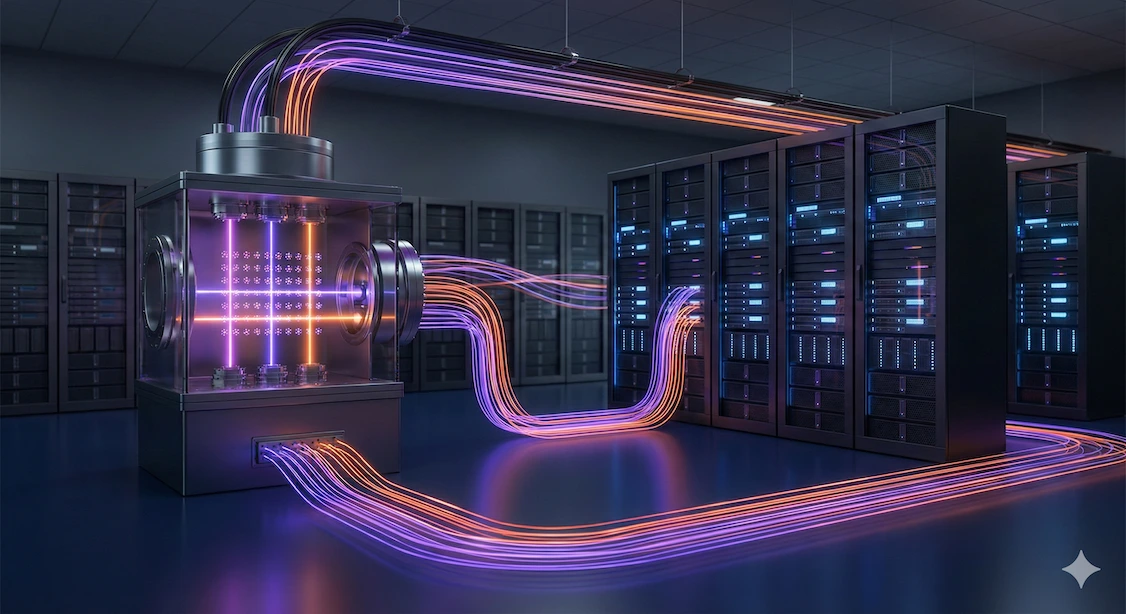

What's emerged is what QuEra calls the zoned architecture—the quantum equivalent of von Neumann architecture for classical computers.

In classical computing, data moves from memory to CPU registers, gets processed, and returns to memory. The quantum analog has a storage zone for qubits, shuttling mechanisms to move them, an entanglement zone for operations, and readout capabilities.

A recent Harvard paper demonstrated continuous operation of 3,000 qubits. This addresses a fundamental challenge in neutral atoms: atoms sometimes escape. Without replenishment, you're singing the beer song—100 atoms on the wall, one fell, now 99, now 98, eventually zero.

The breakthrough shows fresh atoms being loaded continuously while operations proceed. The system ran for hours, enabling genuinely long calculations with maintained qubit populations.
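
Why replenishment matters for long calculations can be illustrated with a toy model. The sketch below tracks the expected number of atoms per cycle, with and without reloading. The 1% loss per cycle and the reload rate of 30 atoms per cycle are made-up illustrative values, not figures from the Harvard paper, and the model ignores everything except average loss and top-up.

```python
def simulate_population(initial_atoms, loss_per_cycle, cycles, reload_per_cycle=0.0):
    """Expected atom count after each cycle in a mean-field toy model.

    loss_per_cycle   -- assumed fraction of atoms that escape each cycle
    reload_per_cycle -- assumed number of fresh atoms loaded each cycle
    """
    population = float(initial_atoms)
    history = []
    for _ in range(cycles):
        population *= 1.0 - loss_per_cycle  # some atoms escape
        # Top up with fresh atoms, never exceeding the array size.
        population = min(float(initial_atoms), population + reload_per_cycle)
        history.append(population)
    return history

# Hypothetical parameters chosen only for illustration.
without_reload = simulate_population(3_000, 0.01, 1_000)
with_reload = simulate_population(3_000, 0.01, 1_000, reload_per_cycle=30)

print(f"after 1,000 cycles without reloading: {without_reload[-1]:.2f} atoms")
print(f"after 1,000 cycles with reloading:    {with_reload[-1]:.2f} atoms")
```

With these assumed numbers, the array without reloading decays to less than one expected atom, while the reloaded array holds steady at its full size.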

Three thousand atoms. Only a few years ago, every qubit had a name. "This is Joe, this is Sam, this is David. We're entangling Joe and David." Now, suddenly, 3,000. Names no longer apply.

A companion paper demonstrated the full architecture for fault-tolerant quantum computing: reloading, continuous error correction rounds, atom cleaning. These are foundational capabilities, not incremental improvements.

The Airline Analogy

Customers ask: with all this progress, are we there yet?

The honest answer: not yet. But the honest context matters too.

Think of the journey from Boston to Santa Clara. You can't do it in one flight with early aircraft. You refuel in Buffalo, then Chicago, then Omaha. Each stop invites distraction—a museum, a ball game, a detour. Progress feels incremental.

But at some point you realize you've reached Nevada. Metaphorically, the destination is now within range without refueling. You can fly direct.

This is where neutral-atom quantum computing stands. The 2025 milestones have demonstrated the fundamental building blocks. The core architecture is validated. The destination—large-scale fault-tolerant quantum computing—is now achievable without stopping.

Beyond Demonstrations

The framing has shifted. Two years ago, 48 logical qubits generated excitement. That excitement was warranted. But logical-qubit demonstrations are no longer the benchmark.

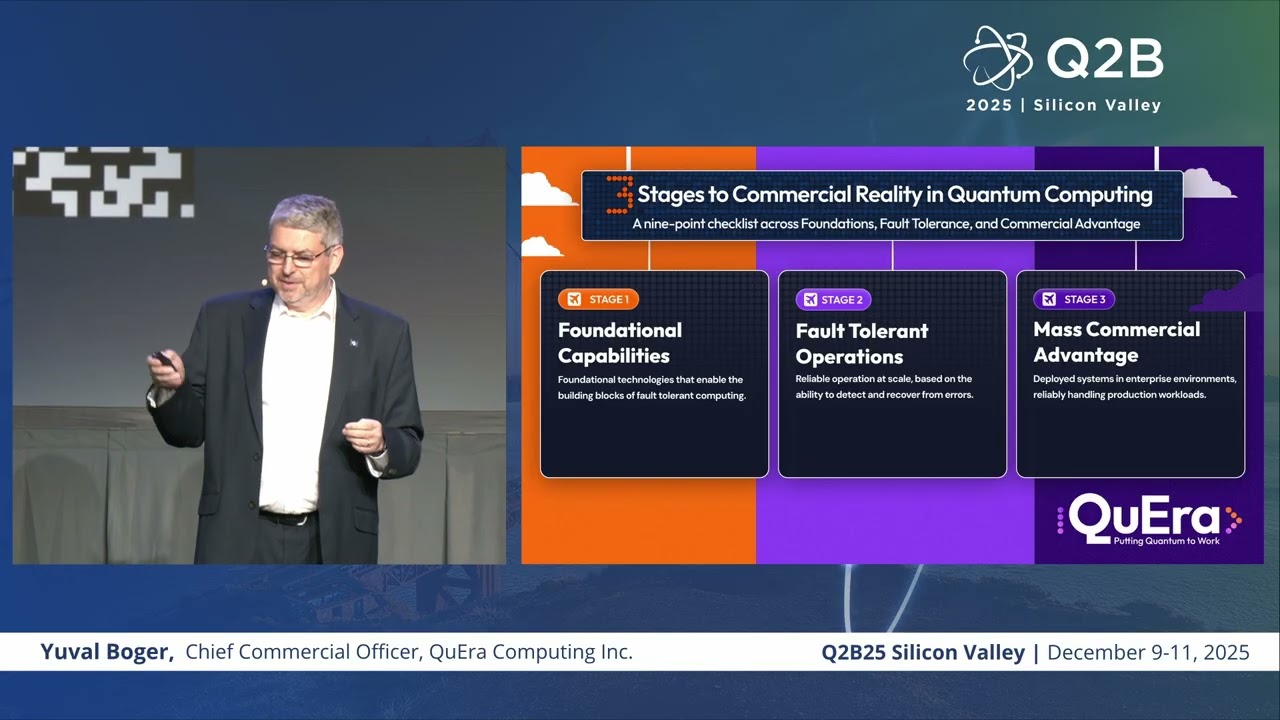

What matters now is the progression through three stages: foundational capabilities, fault-tolerant operations, and mass commercial advantage.

Foundational capabilities ask: Do you have stable, accurate qubits? Can you perform high-quality adaptive operations? Fault-tolerant operations ask: Are your logical qubits actually better than physical qubits? Can you scale them? Can you achieve universality?

And commercial advantage asks the hardest questions: Can you demonstrate calculations impossible classically? Do you have production experience? Is deployment practical—or does it require a football field, a billion dollars, and 100 megawatts?

QuEra's answers are grounded in operational reality. Three years on Amazon Braket. 130 hours per week. 10-20 kilowatt systems that fit in standard data centers. A deployment in Japan at AIST. And a partner ecosystem—the QuEra Quantum Alliance—spanning quantum chemistry, machine learning, optimization, and consulting.

The Next Twelve Months

The conviction is clear: within the next twelve months, the scientific leadership position will be undeniable, and the path to genuinely useful quantum machines will be visible to anyone paying attention.

The work now isn't about proving feasibility. It's about engineering products. It's about integrating controls and management systems so users can derive actual business value. It's about partnerships with non-overlapping competencies and global distribution capability.

Hardware companies that both pioneer technology and build global go-to-market operations face a difficult challenge. Software scales easily; physical products do not. That's why partnerships matter—allowing QuEra to focus on building the most impactful quantum computers while others help deploy them.

The roadmap details will come in early 2026: qubit counts, timelines, engagement models. But the direction is already clear.

The demonstrations proved the architecture. The error correction validates the approach. The scaling shows the path.

The future of fault-tolerant quantum computing isn't around some distant corner. It's the next leg of a journey already well underway.
