Every major computing paradigm has had a moment when incremental progress stopped working.

For CPUs, it was the end of single-thread scaling.

For GPUs and AI, it was the realization, led by NVIDIA, that performance would no longer come from faster chips alone, but from rebuilding the entire stack around a new computational model.

Fault-tolerant quantum computing is approaching its own version of that moment.

At QuEra, we study NVIDIA's trajectory not because quantum systems resemble GPUs, but because NVIDIA successfully navigated the hardest part of a paradigm shift: moving from promising hardware to a durable, scalable platform.

NVIDIA is also an investor in QuEra, a signal, perhaps, that they see parallels worth backing. But this post isn't about that vote of confidence. It's about what we've learned from studying how they built theirs.

To understand that trajectory, we looked at multiple recent appearances by NVIDIA’s CEO, Jensen Huang, and distilled a small set of ideas he returns to consistently. We treat these not as predictions, but as design signals: principles that shaped the last major computing platform and help inform how the next one may be built.

Lesson 1: Paradigm Shifts Are Built, Not Assembled

One of Jensen Huang’s most consistent messages is that modern computing systems cannot be assembled from loosely coupled parts.

“You can’t just design chips and hope that things on top of it go faster... We call it extreme co-design.” - Jensen Huang, GTC Washington, D.C. Keynote [4]

“The breakthroughs don’t come from optimizing one piece. They come from redesigning the system.” - Jensen Huang, Vision for the Future [3]

NVIDIA learned this the hard way. GPUs did not become dominant because they were faster graphics chips. They became dominant because NVIDIA rebuilt the stack (programming models, libraries, and system architectures) around accelerated computing.

QuEra applies the same discipline to FTQC.

Our systems are not designed around raw qubit count, with error correction deferred to later generations. Instead, fault tolerance is the organizing principle of the hardware itself. Physical layout, control mechanisms, atom transport, measurement cadence, and classical feedback are all designed around executing quantum error correction efficiently.

This is the FTQC equivalent of NVIDIA’s CUDA moment: committing early to a structure that enables scale later.

Lesson 2: Energy Is a Constraint That Shapes Architecture

Jensen consistently discusses energy as a top-level constraint to solve for.

“Performance without efficiency doesn’t scale.” - Jensen Huang, 2026 CES Keynote [1]

“If you don’t solve energy efficiency, you don’t get to scale intelligence.” - Jensen Huang, Joe Rogan Experience [5]

AI factories exist only because NVIDIA drove orders-of-magnitude improvements in performance per watt. The same logic applies to FTQC.

With error correction, workloads multiply. Systems that require extreme cooling or megawatt-scale infrastructure may function scientifically, but they fail economically and operationally.

At QuEra, energy efficiency is a first-order design principle. QuEra’s neutral-atom systems operate at room temperature and have favorable power scaling characteristics today (<12 kWh per system), not as a future optimization. This is a direct consequence of treating deployment realism as a design constraint from the start, another lesson drawn from NVIDIA’s experience scaling compute into real data centers.

Lesson 3: The Future of Compute Is Hybrid, by Necessity

Finally, Jensen is explicit that new computing paradigms do not replace old ones; they integrate with them.

“Quantum computers won’t replace classical systems. They will work together.” - Jensen Huang, GTC Washington, D.C. Keynote [4]

“Quantum needs enormous classical compute just to function.” - Jensen Huang, Joe Rogan Experience [5]

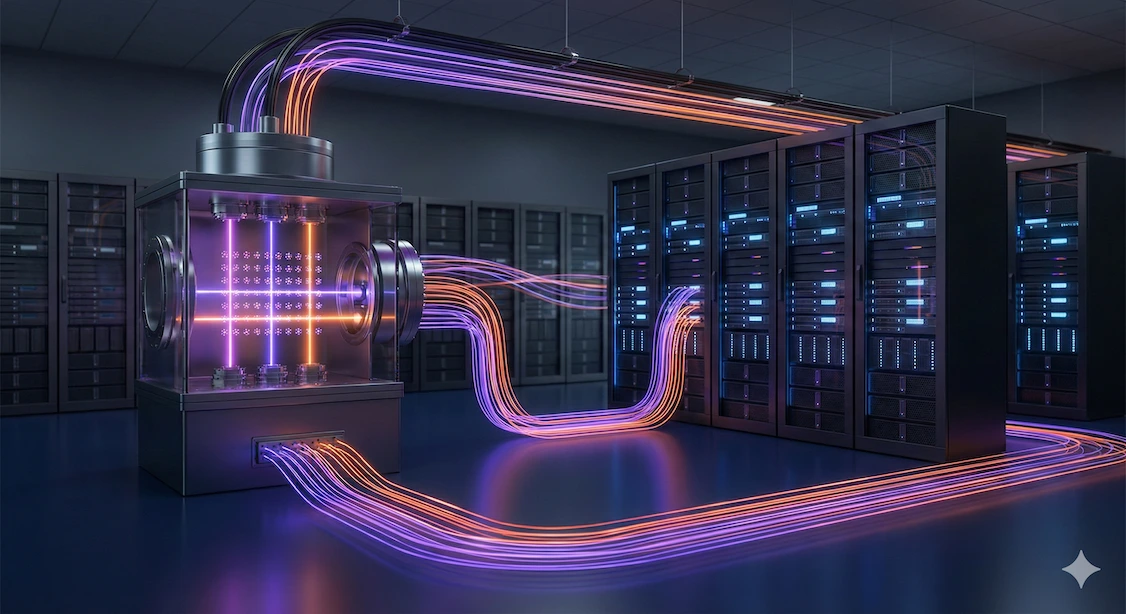

QuEra’s FTQC systems are designed as accelerators within HPC environments, not standalone machines. Classical GPUs and CPUs handle decoding, control, and hybrid algorithm execution. Tight coupling, not loose orchestration, is the north star in our computing roadmap.

This mirrors NVIDIA’s own architectural philosophy: the unit of computing is no longer a chip, but a system.

Why We Take These Lessons Seriously

NVIDIA didn't win because GPUs were faster. It won because it recognized before competitors that accelerated computing required a new kind of system, not just a new kind of chip.

Quantum computing faces the same test. The companies that treat fault tolerance as a feature to add later will find themselves optimizing for benchmarks that don't matter. The companies that treat it as the organizing principle - of hardware, software, and deployment - will build the systems that actually get used.

QuEra's bet is that the FTQC era rewards those who commit to it now, not those who wait for certainty.

We're not waiting.
