At Q2B 2025, Bob Sorensen of Hyperion Research delivered a presentation that should be required viewing for anyone managing an HPC center or planning enterprise compute strategy. Drawing on decades of experience advising the high-performance computing sector, Sorensen outlined an eight-step framework for organizations preparing to integrate quantum computing into their infrastructure.

What made his talk particularly compelling was the reception quantum sessions had received at SC25 in St. Louis just weeks earlier. As Sorensen described it: "Those sessions were all packed - rooms the size of this one were standing room only. The HPC sector is very, very interested."

The HPC community has moved past curiosity. As Sorensen put it: "They have reached the point where they understand, they've heard the message: utility-class quantum computing is on the way, things are going to start to happen, and the time to prepare is now."

As a company that has deployed neutral-atom quantum computers alongside classical supercomputers, we found Sorensen's framework both validating and instructive. Here is what he shared, along with our perspective on what it means for organizations charting their quantum journey.

The HPC Sector's Inflection Point

Sorensen opened with context that explains why quantum is gaining urgency. The numbers are sobering.

During HPC's heyday, every decade delivered nearly three orders of magnitude in performance improvement. That pace has slowed to roughly one to one-and-a-half orders of magnitude per decade. As Sorensen bluntly assessed: "We've had roughly the same HPC architecture for the last 15 years, and it's running out of steam."
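To put those rates in annual terms (our arithmetic, assuming steady exponential growth), a gain of $k$ orders of magnitude per decade corresponds to a yearly improvement factor of $10^{k/10}$ and a doubling time of $10 \ln 2 / (k \ln 10)$ years:

$$
10^{3/10} \approx 2.0\times \text{ per year}, \qquad 10^{1/10} \approx 1.26\times \text{ per year}, \qquad 10^{1.5/10} \approx 1.41\times \text{ per year}
$$

In other words, performance once doubled roughly every year; at today's pace it doubles roughly every two to three years.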

Average system age on the Top500 list is climbing. Machines that first appeared in 2017 remain on the list today. Only 49 new systems joined the Top500 this year, less than 10% of the total.

Perhaps most striking is the concentration at the top. Sorensen illustrated: "If you draw a line through the list so that half the aggregate performance is above and half is below, in the old days the top 100 machines had performance equal to the bottom 400. Today, it's the top eight versus the remaining 492."

The implication: "We now have a bifurcated advanced computing sector: a handful of enormously powerful, expensive machines, and everyone else running much more modest enterprise-class systems."
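Sorensen's half-aggregate line is straightforward to compute from any ranked performance list. The Python sketch below finds the smallest number of top systems whose combined performance reaches half of the list total. The function name and both sample lists are illustrative assumptions, not actual Top500 data.

```python
from typing import Sequence


def split_point(performance: Sequence[float]) -> int:
    """Return the smallest k such that the k fastest systems account for
    at least half of the list's aggregate performance."""
    ranked = sorted(performance, reverse=True)  # fastest first
    half = sum(ranked) / 2
    running = 0.0
    for k, value in enumerate(ranked, start=1):
        running += value
        if running >= half:
            return k
    return 0  # only reached for an empty list


# Made-up lists for illustration only (not Top500 measurements).
flat_list = [1.0] * 500                # every system equally fast
top_heavy = [62.0] * 8 + [1.0] * 492   # eight giants, 492 modest systems
print(split_point(flat_list))
print(split_point(top_heavy))
```

A perfectly flat list splits at 250 systems; the top-heavy example splits at 8, the pattern Sorensen describes for today's list.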

Cost and power compound the challenge. Recent flagship systems cost approximately $600 million each. A 25-megawatt system adds roughly $200 million in power costs over five years. As Sorensen noted: "These are available only to the largest U.S. government programs. No one else spends that kind of money."
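The power figure implies an electricity rate that is easy to check. Assuming the system draws its full 25 MW continuously for five years (our simplifying assumption; real utilization and facility overhead vary):

$$
25\ \text{MW} \times 8{,}760\ \tfrac{\text{h}}{\text{yr}} \times 5\ \text{yr} = 1{,}095{,}000\ \text{MWh} \approx 1.1 \times 10^{9}\ \text{kWh}
$$

$$
\frac{\$200\ \text{million}}{1.095 \times 10^{9}\ \text{kWh}} \approx \$0.18\ \text{per kWh}
$$

On those numbers, power adds about a third to the $600 million purchase price, bringing the five-year total to roughly $800 million.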

At the same time, workloads are diversifying. Traditional modeling and simulation remain foundational, but big data analytics and AI are growing. This diversity is driving interest in new architectural paradigms: GPU-centric systems, memory-centric designs, cloud supplements, and quantum.

QuEra's view: This is precisely why we designed our systems for HPC integration from the start. Our AIST deployment in Japan illustrates the approach: a Gemini-class system installed alongside more than 2,000 NVIDIA H100 GPUs and integrated via CUDA-Q. Room-temperature operation matters when power and floor space are already constrained.

Why HPC Organizations Are Looking at Quantum

Sorensen's survey data revealed that organizations cite multiple motivations for quantum exploration. On average, respondents selected more than three reasons. As he summarized: "We count quantum as one more tool in the toolbox."

The most common motivation is implementing new algorithms not possible on classical systems. But over half also expressed concern about the future performance trajectory of classical computing. They are hedging against architectural limits.

Other motivations include exploring relevant use cases without expecting near-term advantage, and growing cost sensitivity driven by AI and GPU expenses. Total cost of ownership matters more than ever.

When Sorensen asked suppliers where they see the greatest end-user opportunities, computational chemistry and quantum technology R&D topped the list, followed by pharmaceuticals, academia, finance, government labs, and cybersecurity.

QuEra's view: This opportunity map aligns closely with where neutral-atom quantum computing is expected to deliver the most immediate value. Our analog mode has tackled optimization problems that appear across logistics, materials science, and network design. Our gate-based digital-mode systems will address the electronic structure calculations central to computational chemistry and drug discovery. The published results from our collaboration with Merck, Amgen, and Deloitte on pharmaceutical applications demonstrate that this alignment is not theoretical.

The Number One Hurdle: Integration

When Sorensen asked about barriers to adoption, one answer dominated: integration. As he put it emphatically: "You can't just drop off a box at the loading dock. Integration into existing HPC environments is everything."

Other concerns included lack of in-house quantum expertise, difficulty demonstrating ROI, and budget justification. Interestingly, only 8% of respondents said they have no new computational requirements, "meaning 92% have unmet needs today."

QuEra's view: We hear this from every HPC center we engage with. Integration is not a technical afterthought; it is the primary design constraint. This is why our partnership with leaders like Dell Technologies focuses on orchestration within classical infrastructure. It is why our NVIDIA collaboration ensures CUDA-Q compatibility for hybrid workflows. And it is why we invested in a software stack that integrates with standard job schedulers like SLURM.

Dr. Travis Humble at Oak Ridge National Laboratory captured the challenge precisely: "The technology, the mindset around quantum computers is not obviously compatible with high-performance computing technologies, tools and culture." We agree. Bridging that gap requires deliberate engineering, not hope.

Sorensen's Eight-Step Framework

With context established, Sorensen walked through the adoption process his firm recommends. Each step contains hard-won wisdom.

Step 1: Look Inward First

Sorensen's first recommendation may surprise: "Don't talk to quantum vendors yet." Instead, characterize your existing workloads. Identify which jobs matter most by compute time, user importance, or return on investment. Define your pain points. Is time to solution too slow? Are jobs too large? Have you hit algorithmic limits? Are power costs unsustainable?

Once you identify pain points, develop a plan to address them classically. Consider cloud, on-premises upgrades, heterogeneous architectures, and cost optimization. As Sorensen noted: "Even if you never adopt quantum, this exercise has value." The resulting analysis becomes your baseline against which quantum can be evaluated.
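One way to start the "which jobs matter most by compute time" exercise is to aggregate scheduler accounting data by application. The Python sketch below assumes a hypothetical `JobRecord` with application name, node count, and wall hours; real field names and export formats depend on your scheduler, and the sample jobs are invented.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class JobRecord:
    """Hypothetical accounting record; real field names depend on your scheduler."""
    application: str
    nodes: int
    wall_hours: float


def rank_by_node_hours(jobs: Iterable[JobRecord]) -> List[Tuple[str, float, float]]:
    """Return (application, node_hours, share_of_total), largest consumer first."""
    totals = defaultdict(float)
    for job in jobs:
        totals[job.application] += job.nodes * job.wall_hours
    grand_total = sum(totals.values())
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [
        (app, hours, hours / grand_total if grand_total else 0.0)
        for app, hours in ranked
    ]


# Illustrative records only.
jobs = [
    JobRecord("cfd", nodes=128, wall_hours=10.0),
    JobRecord("md", nodes=64, wall_hours=5.0),
    JobRecord("cfd", nodes=256, wall_hours=2.0),
    JobRecord("qchem", nodes=32, wall_hours=20.0),
]
for app, hours, share in rank_by_node_hours(jobs):
    print(f"{app:6s} {hours:8.0f} node-hours  {share:5.1%}")
```

Node-hours is only one lens; Sorensen also lists user importance and return on investment, which would need their own inputs before the ranking becomes a baseline.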

QuEra's view: This is excellent advice that too few organizations follow. When we engage in algorithm co-design with customers, the first phase is always a needs assessment: rigorous characterization of existing workloads before determining which are candidates for quantum acceleration. Without a classical baseline, there is no way to measure quantum value.

Step 2: Build Internal Support

Next, begin building in-house support for quantum exploration. Identify internal champions. These are often rank-and-file researchers or HPC staff, not people with "quantum" in their job titles. Give them access to cloud platforms, conferences, and low-cost experimentation. Establish limited dialogue with vendors. The goal is groundwork, not procurement.

QuEra's view: Cloud access has been essential for exactly this purpose. Our availability on Amazon Braket since 2022 has enabled thousands of researchers to experiment with neutral-atom quantum computing without significant procurement overhead. For organizations ready for deeper engagement, our Premium Cloud Access tier provides reserved capacity and direct support from QuEra scientists. The goal is to reduce the friction of learning.

Step 3: Engage Leadership

At this point, engage leadership. But Sorensen offered pointed advice: "Send the CIO, CTO, or CFO, not the quantum expert. This is about ROI, not qubits."

QuEra's view: This resonates strongly. The executives we work with do not want tutorials on Rydberg blockade physics. They want to understand timeline to value, integration requirements, staffing implications, and total cost of ownership. Our partnerships with Deloitte and BCG X exist precisely to translate quantum capability into business cases that resonate with leadership.

Step 4: Explore Algorithmic Opportunities

Only after these foundational steps should organizations seriously explore algorithmic opportunities. Sorensen cited studies from NERSC suggesting that a significant fraction of advanced workloads could benefit from quantum approaches, particularly in materials science, chemistry, and high-energy physics. Other studies map familiar HPC kernels, such as Fourier transforms, Monte Carlo methods, and linear solvers, to potential quantum techniques.

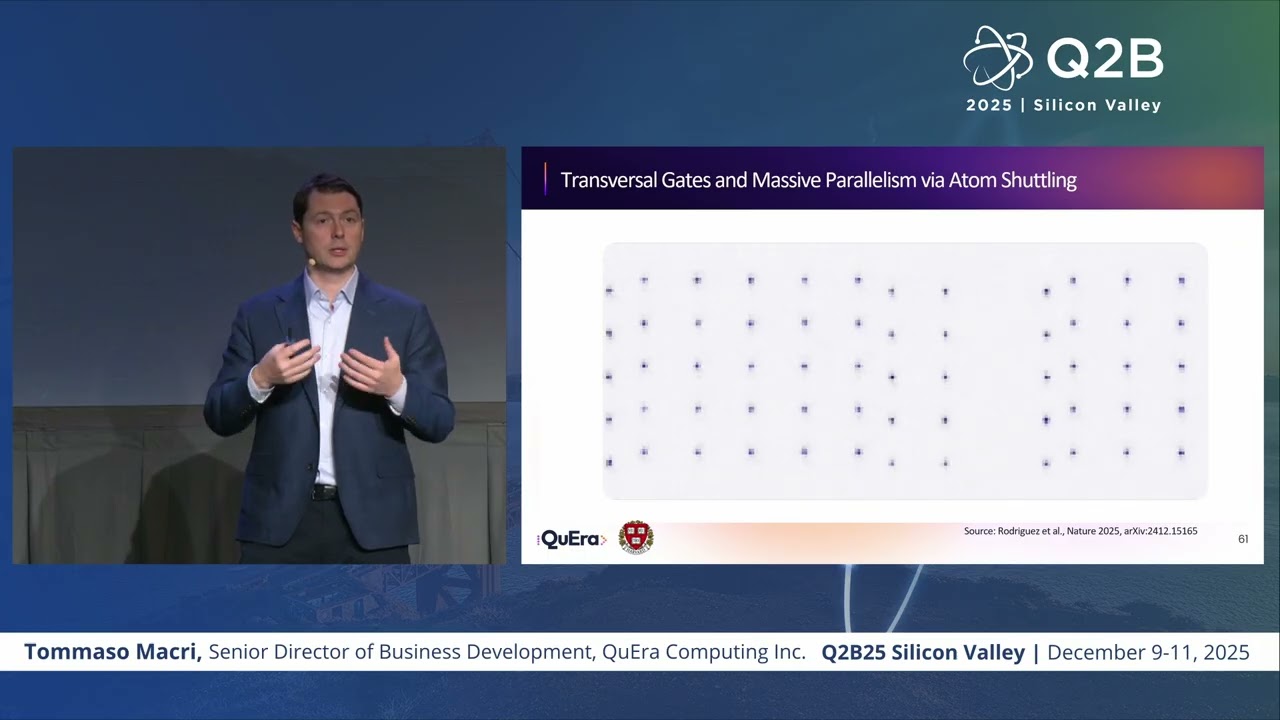

QuEra's view: The algorithmic landscape is more developed than many realize. Our Quantum Alliance partners, including Phasecraft, are mapping specific application domains to neutral-atom implementations. The key insight is that problem structure matters. Our Field-Programmable Qubit Arrays allow the hardware geometry to match the problem geometry, which is particularly powerful for optimization and simulation workloads where graph structure is central.
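To illustrate what "hardware geometry matches problem geometry" can mean: under the Rydberg blockade, two atoms closer than the blockade radius cannot both be excited, so an atom layout defines a unit-disk graph whose blockade constraint is exactly the independent-set condition. The classical Python sketch below builds that graph from atom positions and finds a maximum independent set by brute force. The positions, the radius, and both function names are hypothetical; this is not QuEra software, and brute force is only practical for tiny graphs.

```python
from itertools import combinations
from math import dist
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def unit_disk_edges(positions: Sequence[Point], blockade_radius: float) -> List[Tuple[int, int]]:
    """Connect every pair of atoms separated by at most blockade_radius."""
    return [
        (i, j)
        for (i, p), (j, q) in combinations(enumerate(positions), 2)
        if dist(p, q) <= blockade_radius
    ]


def max_independent_set(n: int, edges: List[Tuple[int, int]]) -> Tuple[int, ...]:
    """Brute-force MIS for tiny graphs: the largest set of atoms with no edge between them."""
    edge_set = set(edges)
    for size in range(n, 0, -1):
        for subset in combinations(range(n), size):
            if all((a, b) not in edge_set for a, b in combinations(subset, 2)):
                return subset
    return ()


# Hypothetical layout: four atoms on a square with 5-unit sides, blockade radius 6 units.
square = [(0.0, 0.0), (5.0, 0.0), (0.0, 5.0), (5.0, 5.0)]
edges = unit_disk_edges(square, blockade_radius=6.0)
print(edges)
print(max_independent_set(4, edges))
```

Side neighbors fall inside the radius and become edges, while diagonal pairs (about 7.07 units apart) do not, so the largest blockade-compatible set is one diagonal pair of atoms.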

Step 5: Assess Quantum Value Against Your Baseline

Next, assess quantum value against the classical baseline developed in Step 1. Compare performance, power, cost, staffing, and infrastructure impacts. Consider power density, cooling requirements, hiring challenges, software rewrites, and front-end classical hardware needs. Also consider the cost of doing nothing.
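A comparison against the Step 1 baseline can start as a simple side-by-side of options, with "do nothing" included as an explicit option whose value can go negative. The Python sketch below is undiscounted and deliberately crude; the `Option` fields, option names, and all figures are hypothetical placeholders, and a real assessment would add discounting, risk, and the staffing and facility factors listed above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Option:
    """One candidate path, in consistent (hypothetical) units such as $M."""
    name: str
    upfront_cost: float   # acquisition, integration, software rewrites
    annual_cost: float    # power, cooling, staffing, support
    annual_value: float   # value relative to today's baseline (negative = losses)


def net_value(option: Option, years: int) -> float:
    """Undiscounted net value over the planning horizon."""
    return (option.annual_value - option.annual_cost) * years - option.upfront_cost


def compare(options: List[Option], years: int) -> List[Tuple[str, float]]:
    """Rank options by net value, best first."""
    return sorted(
        ((o.name, net_value(o, years)) for o in options),
        key=lambda item: item[1],
        reverse=True,
    )


# Illustrative numbers only; replace them with figures from your own baseline.
options = [
    Option("do nothing", upfront_cost=0.0, annual_cost=0.0, annual_value=-1.0),
    Option("classical upgrade", upfront_cost=5.0, annual_cost=1.0, annual_value=2.5),
    Option("classical + quantum pilot", upfront_cost=8.0, annual_cost=1.5, annual_value=3.5),
]
for name, value in compare(options, years=5):
    print(f"{name:28s} {value:+.1f}")
```

The ranking is entirely a function of the inputs; the value of the exercise is forcing every option, including inaction, onto the same scale.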

QuEra's view: This is where neutral atoms offer tangible advantages. Our systems operate at room temperature externally, eliminating dilution refrigerator complexity and the associated power, space, and maintenance burdens. When HPC centers evaluate integration requirements, the operational simplicity of neutral-atom technology is a significant factor. Total cost of ownership extends far beyond hardware price.

Step 6: Manage Expectations

Sorensen emphasized managing expectations. Quantum is not here today, but that gives organizations time to prepare. As he put it: "The sector is still the Wild West" with dozens of vendors and inevitable consolidation. Not all performance gains will be exponential, and benefits will be limited to certain applications. But even order-of-magnitude improvements can be valuable.

He noted the shift in industry perception: "The message has landed: quantum isn't ready today, but it's time to start preparing."
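Sorensen's caveat that benefits will be limited to certain applications can be made concrete with Amdahl's law, a standard HPC rule of thumb that we bring in here as an assumption (it was not part of the talk). If only a fraction $f$ of a workload's runtime is accelerated by a factor $s$, overall speedup is $1 / ((1 - f) + f / s)$. A minimal Python sketch:

```python
def amdahl_speedup(accelerated_fraction: float, kernel_speedup: float) -> float:
    """Overall speedup when only `accelerated_fraction` of runtime becomes
    `kernel_speedup` times faster and the rest runs as before (Amdahl's law)."""
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be in [0, 1]")
    if kernel_speedup <= 0.0:
        raise ValueError("kernel_speedup must be positive")
    return 1.0 / ((1.0 - accelerated_fraction) + accelerated_fraction / kernel_speedup)


# Hypothetical fractions and kernel speedups for illustration.
for fraction in (0.1, 0.5, 0.9):
    for speedup in (10.0, 1e6):
        overall = amdahl_speedup(fraction, speedup)
        print(f"f={fraction:.1f}, kernel x{speedup:g}: overall x{overall:.2f}")
```

Even a millionfold kernel speedup on 90% of runtime yields just under 10x overall, which is why a solid order-of-magnitude gain on the right workload can matter as much as an exponential one on a narrow kernel.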

QuEra's view: We appreciate Sorensen's intellectual honesty here. The industry has suffered from overpromising. Our approach is different: analog quantum computing delivers value on specific problem classes today, while our roadmap to fault-tolerant digital computing addresses longer-term applications. The 2025 breakthroughs in error correction, including our demonstration of logical qubits, show the path is real. But we are clear about what works now versus what is coming.

Step 7: Vendor Selection

Eventually, organizations reach vendor selection. Sorensen's advice: "Focus on demonstrated performance gains on workloads you care about. Modality and qubit counts are secondary." Consider full-stack versus modular approaches, cloud versus on-premises, and partners with vertical-specific expertise.

He noted that cloud access has been essential to the sector's growth, but on-premises systems may become more important for expertise building and long-term utilization.
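Sorensen's priority order can be encoded in a simple weighted scoring sheet so that headline specifications cannot dominate the decision. The Python sketch below is illustrative only: the criterion names, the weights, and both vendor score sets are hypothetical and should be replaced with your own.

```python
from typing import Mapping

# Hypothetical weights reflecting Sorensen's priority order; tune to your own criteria.
WEIGHTS = {
    "demonstrated_gain_on_our_workloads": 0.50,
    "integration_fit": 0.25,     # schedulers, hybrid SDKs, facility needs
    "vertical_expertise": 0.15,
    "headline_specs": 0.10,      # modality, qubit count: deliberately secondary
}


def weighted_score(scores: Mapping[str, float], weights: Mapping[str, float] = WEIGHTS) -> float:
    """Combine 0-10 criterion scores into one weighted total."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Invented scores: vendor A leads on specs, vendor B on demonstrated results.
vendor_a = {"demonstrated_gain_on_our_workloads": 3, "integration_fit": 5,
            "vertical_expertise": 5, "headline_specs": 10}
vendor_b = {"demonstrated_gain_on_our_workloads": 8, "integration_fit": 5,
            "vertical_expertise": 5, "headline_specs": 4}
print(weighted_score(vendor_a), weighted_score(vendor_b))
```

With these weights, vendor B comes out ahead despite weaker headline specifications, which is the outcome Sorensen's advice is meant to produce when demonstrated results diverge from specs.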

QuEra's view: We agree that demonstrated performance on relevant workloads matters more than headline metrics. This is why we invest in co-design engagements that prove value on customer-specific problems before procurement decisions. And we agree that on-premises deployment is growing in importance. Our AIST contract and the Dell partnership both reflect demand for quantum infrastructure that organizations own and operate.

Step 8: Procurement and Beyond

The final steps involve procurement decisions: cloud versus on-premises, buying versus leasing, upgrade paths, and benchmarks. Sorensen emphasized: "HPC users care about benchmarks that reflect their real workloads. Quantum suppliers should be thinking the same way."
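One widely used workload-level metric, borrowed from optimization benchmarking rather than from Sorensen's talk, is time-to-solution (TTS): the wall time needed to obtain an acceptable answer with a chosen confidence such as 99%. For independent runs of duration $t$ that each succeed with probability $p_s$, TTS $= t \cdot \ln(1 - p_{\text{target}}) / \ln(1 - p_s)$. The Python sketch below assumes a fixed per-run success probability; the function name and example figures are hypothetical.

```python
import math


def time_to_solution(run_time: float, success_prob: float, target: float = 0.99) -> float:
    """Wall time needed so that at least one of several independent runs,
    each succeeding with `success_prob`, succeeds with probability `target`."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must be in (0, 1)")
    if success_prob <= 0.0:
        return math.inf          # the solver never reaches the quality threshold
    if success_prob >= target:
        return run_time          # a single run already meets the target
    repetitions = math.log(1.0 - target) / math.log(1.0 - success_prob)
    return run_time * repetitions


# Hypothetical comparison on the same instances and the same quality threshold.
classical = time_to_solution(run_time=2.0, success_prob=0.9)
candidate = time_to_solution(run_time=0.05, success_prob=0.05)
print(f"classical: {classical:.2f} s, candidate: {candidate:.2f} s")
```

In this made-up example, a solver whose individual runs are 40 times faster ends up with a slightly longer TTS because each run succeeds far less often, which is exactly what per-run or qubit-count metrics would hide.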

QuEra's view: The procurement landscape is evolving rapidly. Some organizations want cloud flexibility. Others require on-premises deployment for data sovereignty, hybrid algorithm latency, or strategic capability building. We offer both. Our participation in the DARPA Quantum Benchmarking Initiative reflects our commitment to rigorous, application-relevant performance validation. We welcome scrutiny on workloads that matter.

The Timeline Is Clearer Than You Think

Sorensen's data leads to a clear conclusion: "In 10 to 15 years, any serious HPC organization with complex workloads will likely be using some form of quantum computing." That may sound distant, but procurement cycles for major infrastructure span years. Organizations that begin preparation now will be positioned when the technology matures.

QuEra's view: Forward-thinking HPC centers, enterprise innovators and government organizations realize that the timeline is much shorter, and they are engaging with us today.

Conclusion

Bob Sorensen's framework provides a practical roadmap for HPC organizations navigating the quantum transition. The eight steps, from looking inward first to continuous assessment and iteration, reflect hard-won wisdom from decades of technology adoption in the HPC sector.

For us, the framework validates design decisions we made years ago: prioritizing integration over standalone performance, offering both cloud and on-premises deployment, building an ecosystem of partners with vertical expertise, and maintaining intellectual honesty about what works today versus what is coming.

The HPC sector is ready. The standing-room-only sessions at SC25 demonstrate that. The question is no longer whether quantum will become part of the advanced computing stack, but how organizations will prepare for that transition.

If you are beginning that preparation, we are here to help.

Interested in learning more about QuEra's approach to HPC integration? Contact us about our Premium Cloud Access, algorithm co-design services, or on-premises deployment options.

Watch the full presentation here:
